Tweet Analytics for Disaster and Calamity Management

INFO 523 - Fall 2023 - Project Final

Introduction:

In times of disasters, individuals frequently turn to social media platforms to communicate information regarding required aid or incidents.

Twitter provides an unprecedented opportunity to access a vast amount of information and gain insights directly from those affected by disasters. Harnessing of Twitter’s potential is crucial to enhance disaster response and ensure public safety in critical situations.

Goal and problem statement:

Goal- In the face of dynamic disasters, a proactive approach to information is essential. Traditional methods can lag, hampering swift responses and resource allocation. Validating Twitter-shared information is critical to prevent panic and confusion among affected communities.

Problem statement- Conduct classification on tweets during crisis, validate tweets, and deliver accurate information to disaster management teams, thereby contributing to the smooth functioning of rescue operations and boosting the safety and resilience of communities during critical events.

Execution of plan:

- Created a new streaming pipeline- Used NTscraper to create a Nitter object which uses Beautiful soup in the back end to fetch tweet details such as “text”, “username” and statistics such as “likes”,“comments” and “retweets”.

- Model building- Used LSTM model to build a fake news classification model and give us the relevant and important details corresponding to disaster management.

- Model training- Used Kaggle dataset to perform model training and get accuracy scores.

- Model testing- Use real time data based on hashtag- “#forestfire” and location set to near “USA”.

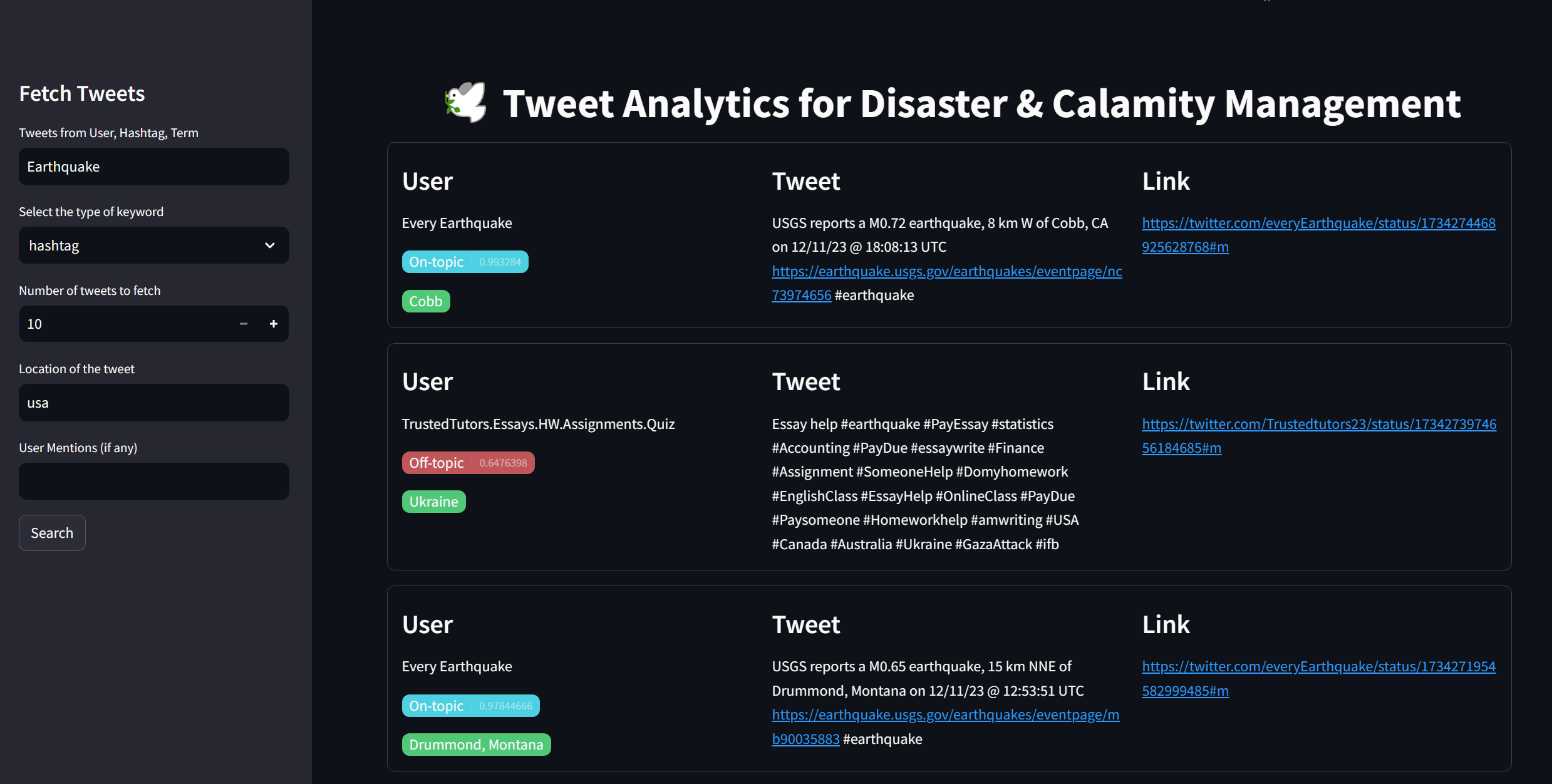

- GUI creation- Integrate a search bar for input of hashtag, after which the model fetches the data and performs classification. The classified real tweets are displayed with tweet link, location (if available) and the user.

Data:

Twitter API enables developers to fetch tweets in real-time using keywords. Since the previous month, twitter had revoked the access to pull tweets in free tier.

To get the Real-time tweets, we resorted to creating and utilizing a Nitter instance from a web-scraping based package. The tweets are fetched based on type of disaster (hashtag) and location.

These tweets will be then analysed in real-time, annotated with insights generated from our modeling process

The modeling required Fake Tweet Classification dataset from kaggle is used for classification of tweets as real or fake.

The model trained on this dataset was utilized for classifying and segregating the tweets to filter out spotlight tweets.

Our analysis plan involved exploring the dataset for suitability of the training. The insights will be shown in the next slides

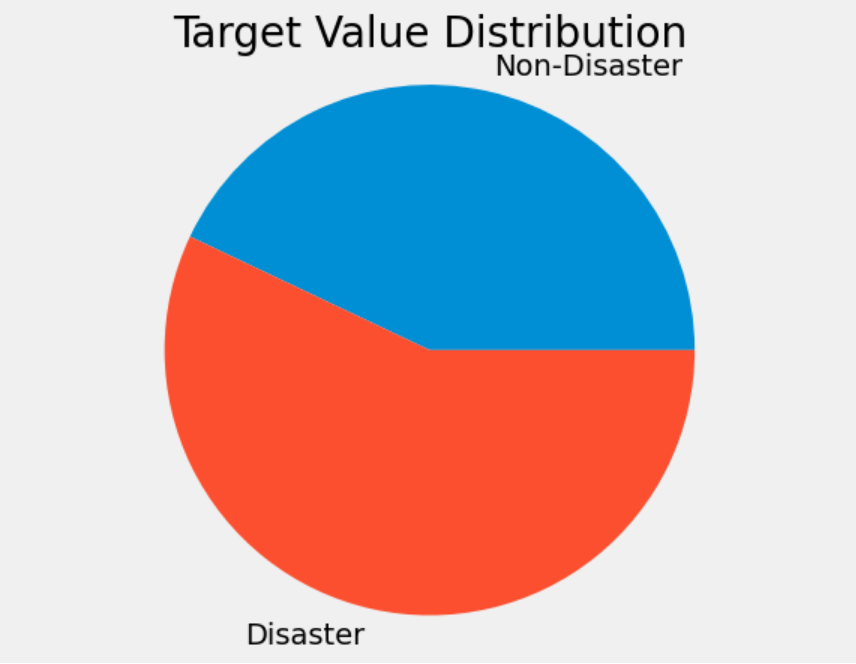

Pie Chart:

This is the target variable distribution of training dataset. With a fairly even distribution, our model had a high chance of reliable training outcomes.

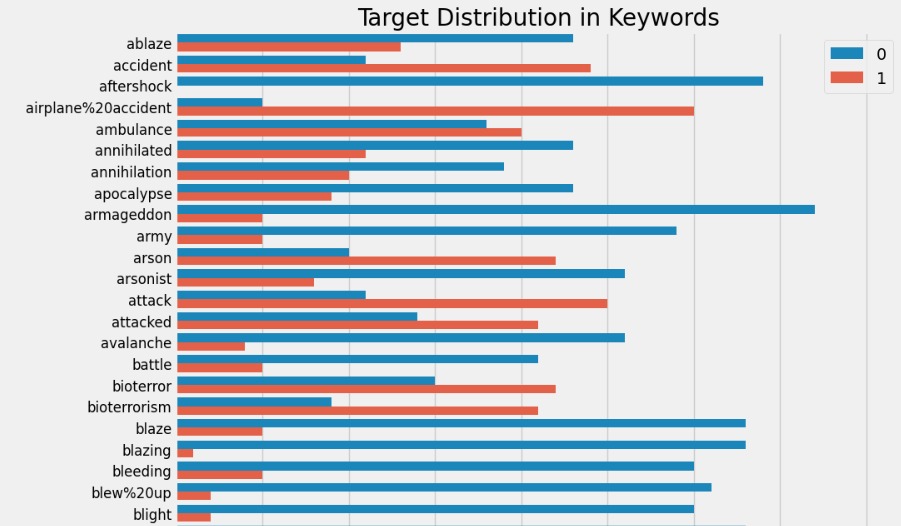

Bar Plot:

The main unique keywords that fall under the tweets tagged as “disaster tweets” has the following distribution. It is evident that tweets that talk about the disaster have really focuses terms according to the disaster.

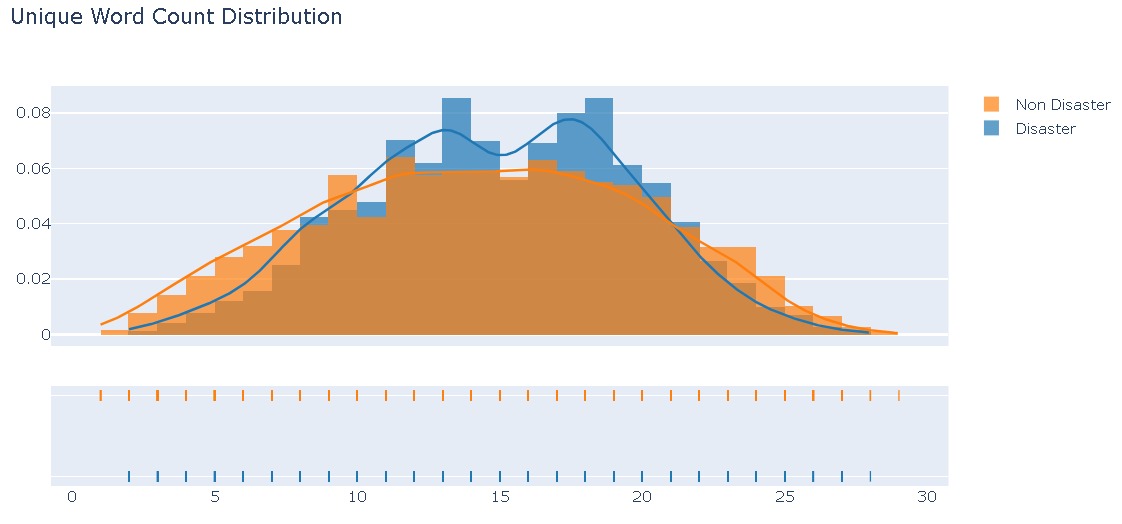

Density Plot:

With both distribution of the categories not being significantly different from an ideal normal distribution, we had the notion that the model will receive sufficient learning tokens

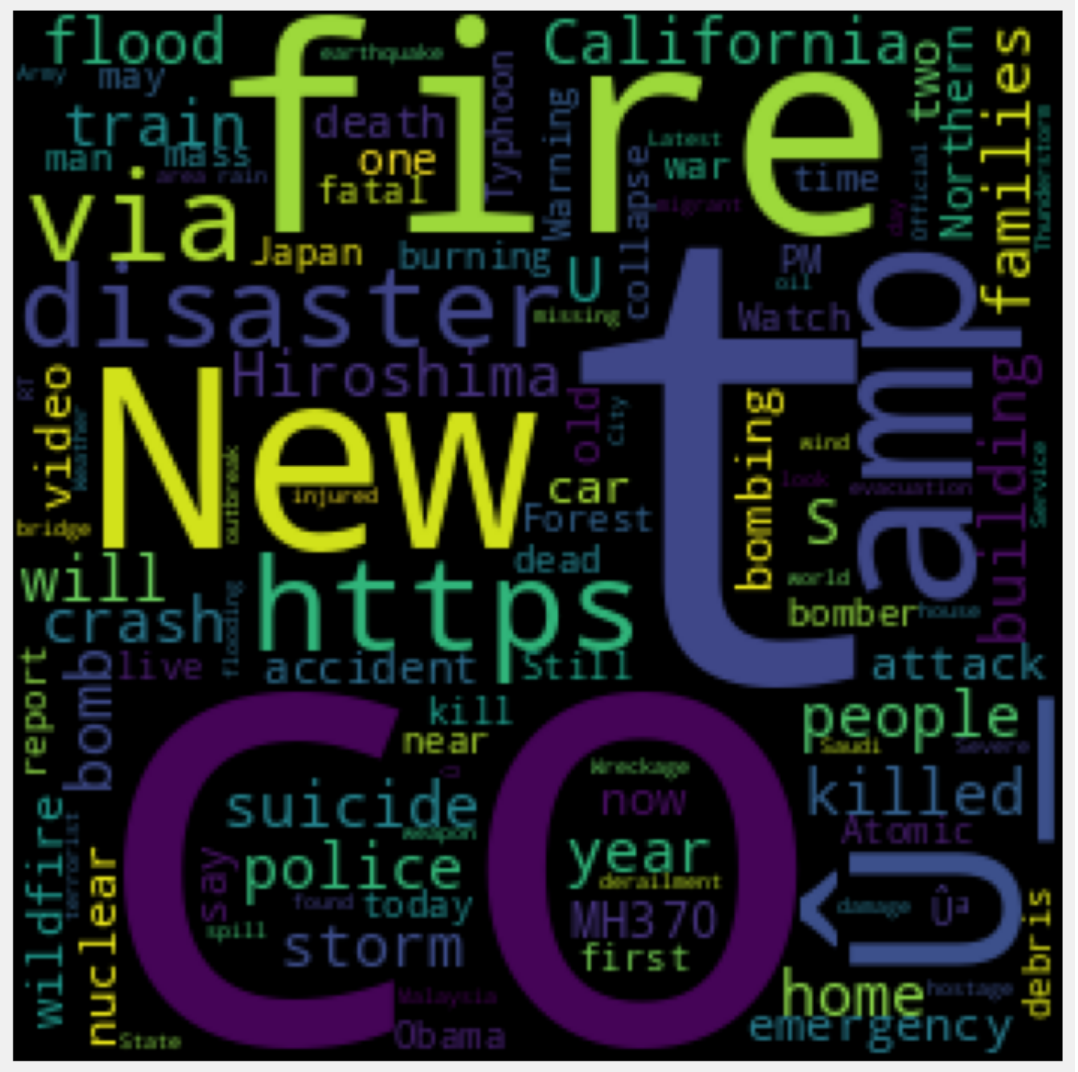

Word Cloud:

This is the wordcloud from the combined tweets of the dataset, for the “disaster” class.

Training Outcomes

BERT

First the dataset tweets were broken down through tokenization. These tokens can be individual words or subwords, phrases, or even characters, depending on the specific tokenization technique used. The primary goal of tokenization is to facilitate natural language processing tasks by converting textual data into a format that can be easily handled by algorithms and models.

Training BERT was computationally expensive, and well as memory intensive. The accuracy score for BERT was 97% on training and 82% on testing.

LSTM

LSTM on the other hand was not memory intensive, although it was fairly heavy to train, it was able to achieve a great learning curve. It achieved a training accuracy of 83% and testing accuracy of 80%.

When both the models were tested on the real-time set, LSTM provided better results compared to BERT. Owing to the fact that some fake tweets which contain the main keywords, can be misclassified due to the attention mechanism.

Outcome

Results:

hash_earthquake:

Results:

hash_flood:

Results:

hash_hurricane:

Live Demo:

Now, we are going to demonstrate our project in action.

Challenges Faced

We were not able to get access to fetch tweets with twitter developer API, because of the revoked access of free tier developers. This was later improvised using a web scraping object

There were a greater need for computational power, as text based models are computationally heavy. We utilized online resources and GPU to train the model and load them.

Response time is a greater requirement when it comes to tools like disaster monitoring systems. With heavy models running in the background, memory optimization and caching plays a great role.

Conclusion:

It has been a constant effort of the developer community to utilize twitter and generate analysis to manage/monitor disasters. However, there are some challenges when considering social media as an information source for disaster response. In particular, social media streams contain large amounts of irrelevant messages such as rumors, advertisements, or even misinformation.

This project is an improvement to previously attempted disaster tweet monitoring systems hosted by Google (Tensorflow and Kaggle competitions). What we achieved on top of the previous attempts, is the improved model scores and higher analytic insights on the location tracking.

This particular attempt can serve as a derivation for improve intelligence received during a crisis, and can enable intelligent officers to access calls for help quickly.